AB Testing

Between two designs for a single task, which is the better option?

Context

My group and I were tasked with designing two different iterations of a single interface and then determining which of the two was better for a given task.

The task we chose as our metric was: "remove all of the items from the cart." While this task was simple, we felt that it was central to the experience of the interface.

Research and Design

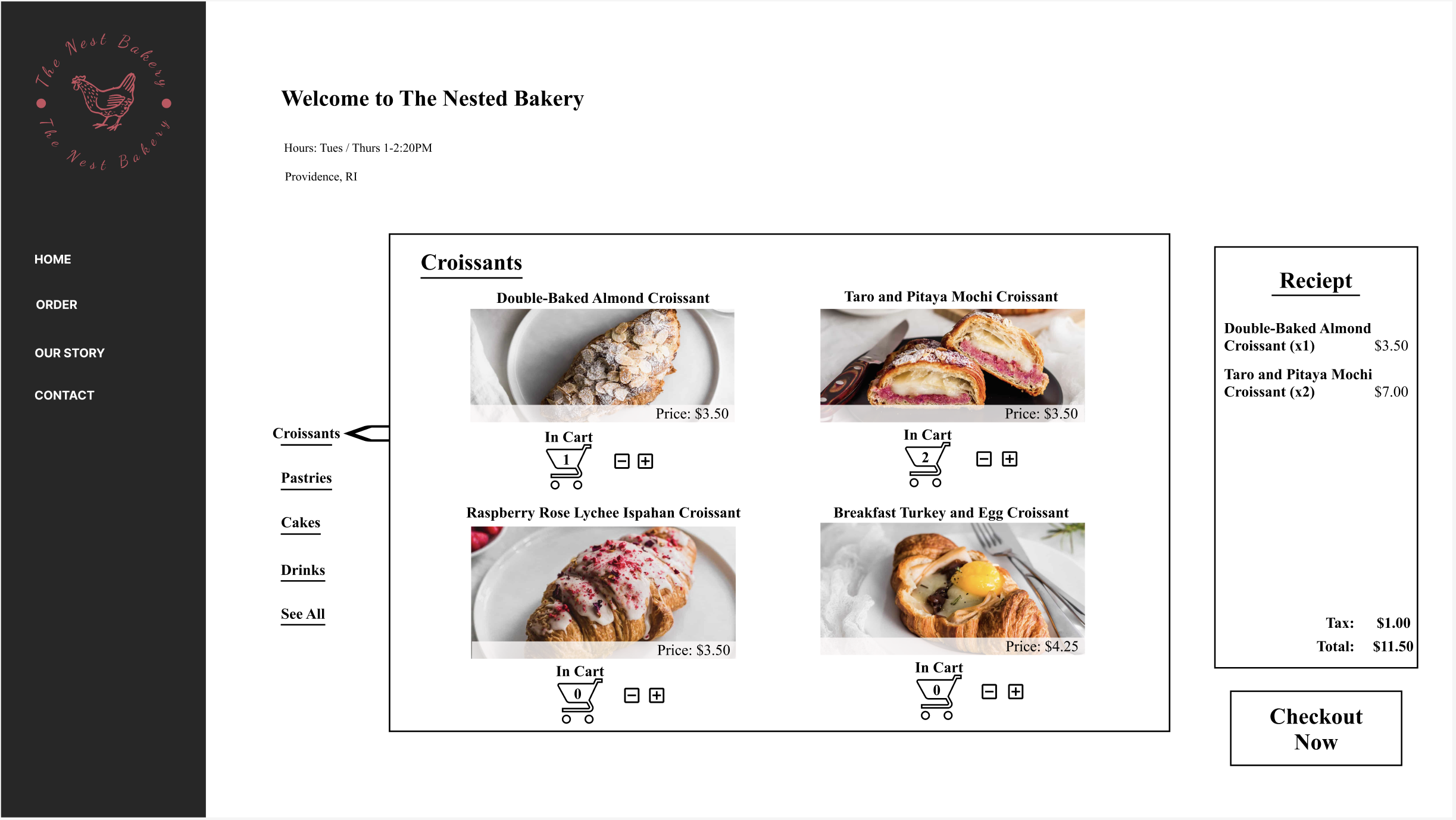

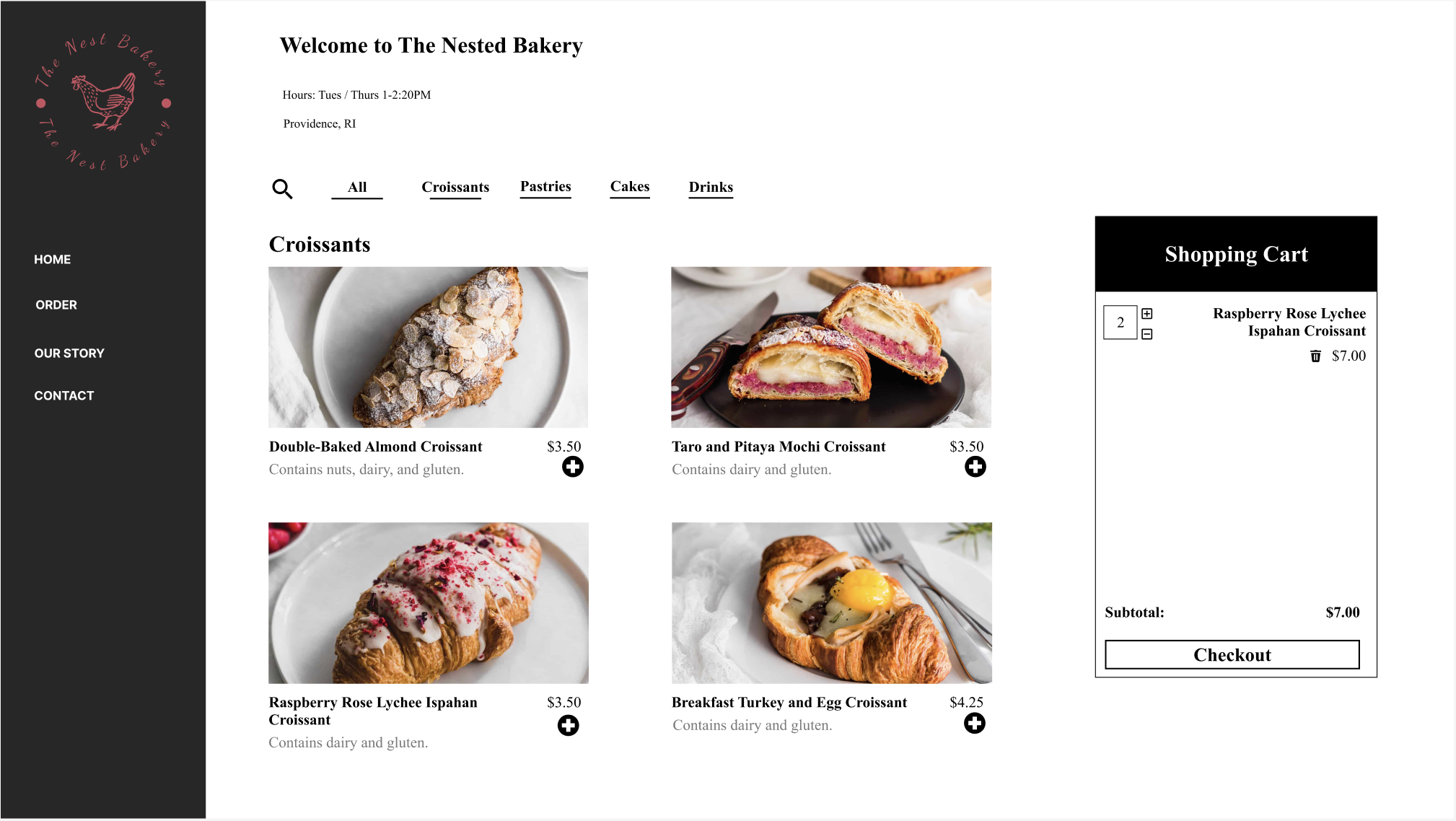

In designing the two iterations, the main question we considered was "how do existing online shops add and remove items?" We concluded that most shops did one of the following, or a combination of the two:

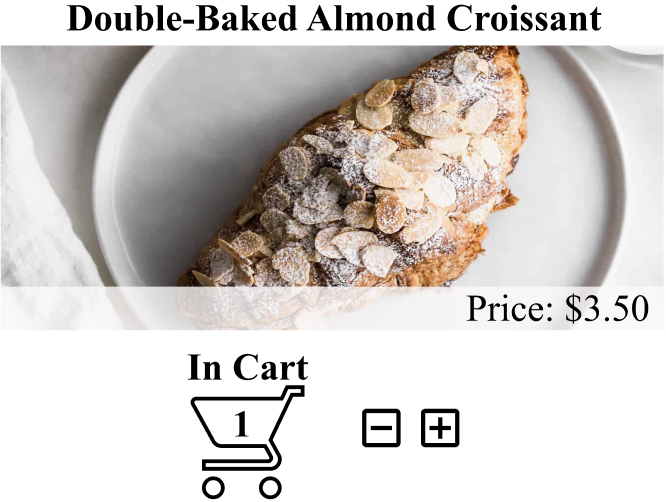

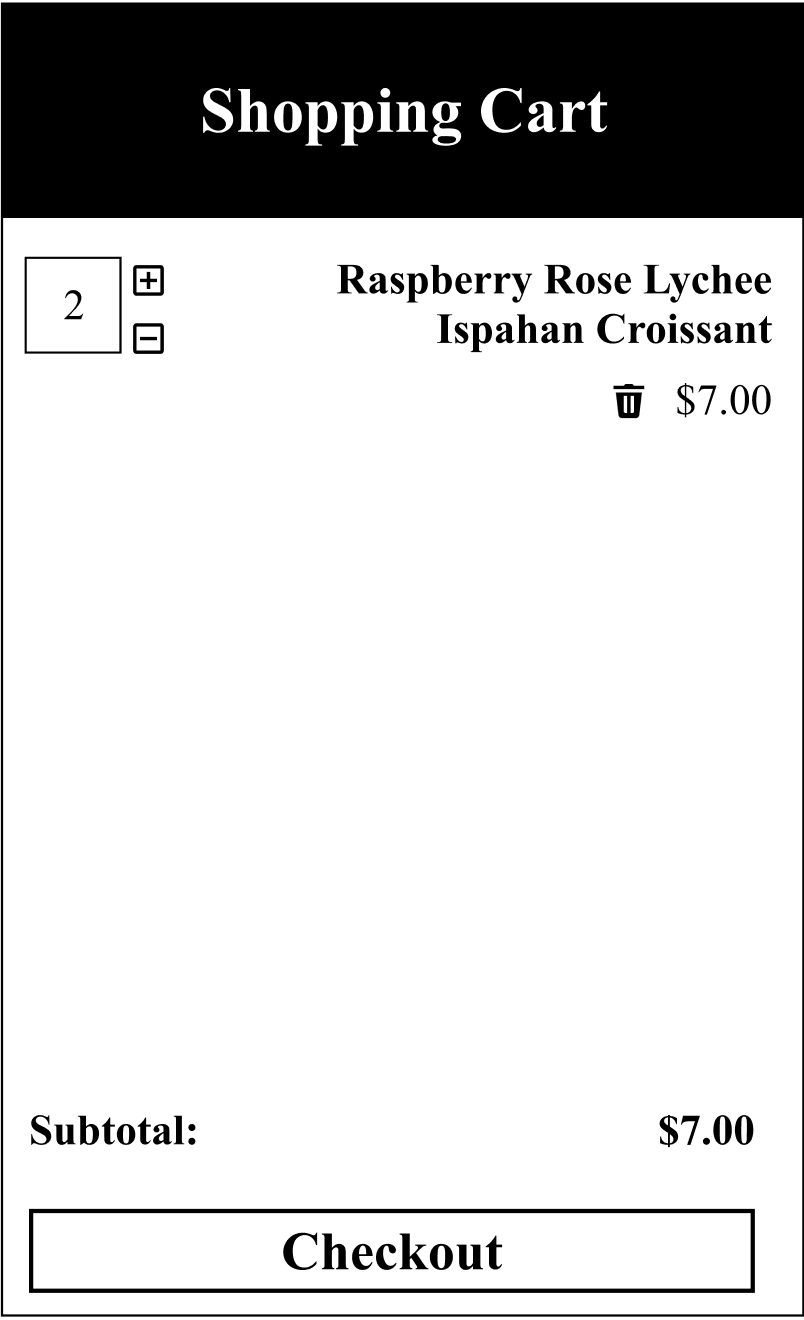

In the first example, items are added and removed solely from the item card in the gallery. In the second example, items can be added and removed from the cart itself, in addition to being added from the item card. As such, we chose to implement these two approaches in our two designs.

The first approach is advantageous in that users make a visual connection, rather than a semantic one, between what they're adding and the actual item. The tradeoff, however, is that removing an item requires more navigation, since users must navigate back to the item in the gallery to remove it. In the second approach, the reverse is often true: removing an item takes less navigation, but the connection to the item is more semantic than visual.

After weighing these tradeoffs, we drew up two designs:

Version A implements cart editing strictly through the item cards, and Version B implements cart editing through both the item cards and the cart itself.

User Testing

The next question we sought to answer was: which version is better? To answer it, we used our metric task, which required users to remove all of the items from the cart. Based on this metric, we hypothesized that Version B, where cart editing could be done through both the item cards and the cart itself, would perform better.

To test this hypothesis, we had two users execute the task on each design. While they completed the task, we tracked their eye movements to see how efficiently they worked through it. We then generated heat maps from those eye movements, showing the areas of the screen they looked at most. Here's the combined data for both users:

[Figures: combined eye-tracking heat maps for both users]
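To illustrate the idea behind a gaze heat map, here is a minimal Python sketch. It is not necessarily how our tooling worked. It assumes each gaze sample is an (x, y) pixel coordinate on a screen of known size. The function names, the 100-pixel cell size, and the sample points are all hypothetical. The sketch counts how many samples land in each grid cell, and the cells with the highest counts are the "hot" regions of the map.

```python
def gaze_heatmap(points, width, height, cell=100):
    """Bin gaze samples into a grid of counts (hypothetical helper).

    points: iterable of (x, y) screen coordinates in pixels (assumed format).
    Returns a list of rows; each row is a list of per-cell sample counts.
    """
    cols = -(-width // cell)   # ceiling division so partial cells at the edge count
    rows = -(-height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:  # ignore samples that fall off-screen
            grid[int(y) // cell][int(x) // cell] += 1
    return grid


def hottest_cell(grid):
    """Return (row, col) of the cell the user looked at most."""
    return max(
        ((r, c) for r in range(len(grid)) for c in range(len(grid[0]))),
        key=lambda rc: grid[rc[0]][rc[1]],
    )


# Made-up gaze samples on a 1000x700 screen, for illustration only
samples = [(50, 40), (60, 30), (950, 620), (120, 80), (55, 45)]
grid = gaze_heatmap(samples, 1000, 700, cell=100)
print(hottest_cell(grid))
```

Rendering the grid as colors (for example, darker for higher counts) produces the familiar heat-map image. Real eye-tracking tools typically also smooth the counts, which this sketch omits.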

In analyzing these results, we can see that the number of data points, which correlates with the time taken to complete the task, is much higher in Version A. From this, we concluded that Version B is the more efficient design.
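The link between data points and time holds if the eye tracker records samples at a fixed rate: time on task is then roughly the sample count divided by that rate. The sketch below shows this arithmetic. The 60 Hz rate and the sample counts are hypothetical numbers chosen for illustration, not our measurements, and `time_on_task` is an illustrative helper.

```python
def time_on_task(num_samples: int, rate_hz: float) -> float:
    """Estimate seconds spent on the task from the number of gaze samples.

    Assumes the tracker samples at a fixed rate (rate_hz is hypothetical here).
    """
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return num_samples / rate_hz


# Hypothetical values for illustration only, not our measured data
RATE_HZ = 60.0
sample_counts = {"A": 1800, "B": 900}

durations = {v: time_on_task(n, RATE_HZ) for v, n in sample_counts.items()}
faster = min(durations, key=durations.get)   # version with the shortest estimated time
ratio = durations["A"] / durations["B"]      # the rate cancels: same as 1800 / 900

print(durations, faster, ratio)
```

Because the rate cancels in the ratio, comparing raw sample counts between versions is enough to say which one took longer, as long as both were recorded at the same rate.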

Conclusions

This project really allowed me to use my skills as a scientist! I enjoyed the process of forming a hypothesis and using AB testing to test it; it's something I haven't had much experience with in the computer science world. Beyond that, this project was also really cool because of the eye-tracking technology we were able to use.

Perhaps most importantly, I was able to see first hand the effects of good design when usability is taken into consideration. Version A was designed logically, but without the usability considerations needed to optimize the experience, whereas in Version B those considerations had a considerable effect on the user experience. As such, this project was invaluable in reinforcing the importance of usability-centered design.